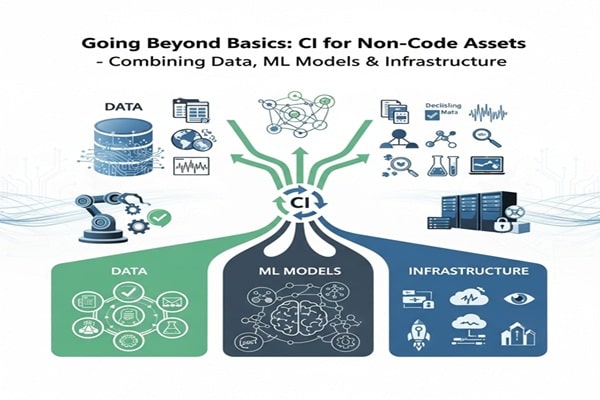

When you think of continuous integration (CI), you probably picture developers rapidly merging and testing lines of code. But here’s the thing—today’s enterprise world has evolved far beyond just code. Data pipelines, machine learning (ML) models, and infrastructure scripts have all joined the digital ecosystem.

Modern businesses aren’t just powered by applications anymore; they’re driven by everything digital—from configuration files to automation scripts. So, if we’re constantly testing and integrating code, shouldn’t we be doing the same for these non-code assets too?

Let’s explore how continuous integration is evolving beyond software—and why it’s the key to smarter, faster, and more reliable enterprise systems.

Redefining What Counts as a “Digital Asset”

In the past, CI pipelines focused solely on application code. But today’s enterprise runs on much more than that.

Think about it:

- Data pipelines shape business intelligence.
- ML models drive personalization and automation.
- Infrastructure-as-code (IaC) scripts define environments.
- Configuration files control app behavior and performance.

These are no longer side players—they’re core assets that influence everything from user experience to revenue. When they’re left out of the CI process, errors can slip through the cracks and surface late in production, leading to costly downtime or inconsistent performance.

So, the first step is to expand the definition of assets. Modern CI must validate everything—code, data, models, and infrastructure—continuously.

Why Data Needs Continuous Integration

Let’s face it: data is the heart of every modern enterprise. It fuels personalization, analytics, and predictive insights. But data is also volatile—it changes fast and often.

That’s why data CI is becoming a must-have. By integrating data pipelines into CI workflows, teams can automatically:

- Validate data quality in every environment.
- Detect schema changes before they cause system errors.
- Catch data drift and missing values early.
- Ensure consistent accuracy across staging and production systems.

Data CI helps keep broken or outdated data out of reports and machine learning models. In data-driven businesses, this isn’t just helpful; it’s mission-critical.
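To make this concrete, here is a minimal sketch of a data check that a CI job might run against a sample batch before a pipeline change is merged. The schema, the column names, and the 10% missing-value threshold are all assumptions made for illustration; a real pipeline would load these from its own data contract.

```python
# Hypothetical expected schema: column name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "country": str}


def check_batch(rows, schema=EXPECTED_SCHEMA, max_missing_ratio=0.1):
    """Return a list of human-readable problems found in a batch of rows."""
    problems = []
    missing = {col: 0 for col in schema}

    for i, row in enumerate(rows):
        # Schema change: a column appeared that the contract does not know.
        extra = set(row) - set(schema)
        if extra:
            problems.append(f"row {i}: unexpected columns {sorted(extra)}")

        for col, expected_type in schema.items():
            value = row.get(col)
            if value is None:
                missing[col] += 1  # counted here, judged per column below
            elif not isinstance(value, expected_type):
                problems.append(
                    f"row {i}: {col} is {type(value).__name__}, "
                    f"expected {expected_type.__name__}"
                )

    # Missing values: flag any column whose null ratio exceeds the threshold.
    for col, count in missing.items():
        if rows and count / len(rows) > max_missing_ratio:
            problems.append(f"{col}: {count}/{len(rows)} values missing")

    return problems
```

A CI step could call `check_batch` on a sample from each environment and fail the build whenever the returned list is not empty.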

Bringing ML Models into the CI Pipeline

Machine learning models change every time they are retrained on new data. That flexibility introduces risks: what if a retrained model performs worse than before? What if it becomes biased or incompatible with production systems?

That’s where ML continuous integration (ML CI) comes in. By integrating models into CI workflows, companies can:

- Automatically benchmark each retrained model against the old version.
- Test for accuracy, drift, and bias before deployment.
- Validate performance in production-like environments.
- Maintain version control for full auditability.

This kind of governance helps ensure that every model update is safe, stable, and high-performing before it ships. It blends automation with accountability.
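As a rough illustration of benchmarking a retrained model against the old one, the sketch below compares predictions from both models on the same held-out labels and applies two gates: an accuracy regression limit and a simple per-group accuracy gap as a stand-in for a bias check. The function names, thresholds, and the choice of accuracy as the metric are assumptions, not a prescribed method.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def promotion_gate(baseline_preds, candidate_preds, labels, groups,
                   max_regression=0.01, max_group_gap=0.10):
    """Decide whether a retrained (candidate) model may replace the baseline.

    `groups` holds one group label per example (hypothetical, e.g. a region).
    """
    base_acc = accuracy(baseline_preds, labels)
    cand_acc = accuracy(candidate_preds, labels)

    # Candidate accuracy per group, used as a crude fairness signal.
    per_group = {}
    for group in sorted(set(groups)):
        idx = [i for i, g in enumerate(groups) if g == group]
        per_group[group] = accuracy([candidate_preds[i] for i in idx],
                                    [labels[i] for i in idx])
    gap = max(per_group.values()) - min(per_group.values())

    reasons = []
    if cand_acc < base_acc - max_regression:
        reasons.append(f"accuracy regressed: {cand_acc:.3f} vs {base_acc:.3f}")
    if gap > max_group_gap:
        reasons.append(f"group accuracy gap {gap:.3f} exceeds {max_group_gap:.3f}")

    return {"passed": not reasons, "reasons": reasons,
            "baseline_accuracy": base_acc, "candidate_accuracy": cand_acc,
            "group_gap": gap}
```

In a pipeline, the job would exit with a non-zero status when `passed` is false, and the `reasons` list would go into the build log for auditability.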

Infrastructure as Code Joins the CI Revolution

Infrastructure used to be something you manually configured. Now, with Infrastructure as Code (IaC), your servers, networks, and environments are all defined through scripts.

But just like app code, infrastructure code can break things if not tested properly. Imagine a misconfigured variable crashing your production server—ouch.

By integrating IaC scripts into CI pipelines, teams can automatically:

- Validate provisioning and configuration before rollout.
- Check for compliance and security issues.
- Ensure environment consistency across development, testing, and production.

With IaC integrated into CI, infrastructure becomes just as reliable and testable as software.
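Here is one way such a check might look, assuming each environment's configuration has already been parsed into a plain dictionary. The key names (`region`, `instance_type`, `ingress`) and the single security rule are hypothetical; real IaC tools ship their own validation commands, and this sketch only shows the kind of logic a CI step can layer on top.

```python
def validate_environments(envs, required_keys):
    """Check parsed environment configs for missing keys, risky rules, and drift.

    `envs` maps an environment name to its config dictionary.
    """
    problems = []

    for name, cfg in envs.items():
        # Configuration check: every environment must define the required keys.
        missing = sorted(set(required_keys) - set(cfg))
        if missing:
            problems.append(f"{name}: missing keys {missing}")

        # Security check (illustrative rule): SSH must not be open to everyone.
        for rule in cfg.get("ingress", []):
            if rule.get("port") == 22 and rule.get("cidr") == "0.0.0.0/0":
                problems.append(f"{name}: SSH open to the internet")

    # Consistency check: all environments should define the same set of keys.
    key_sets = {frozenset(cfg) for cfg in envs.values()}
    if len(key_sets) > 1:
        problems.append("environments define different sets of keys")

    return problems
```

Running this on every pull request that touches infrastructure code catches a forgotten variable in one environment before it reaches rollout.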

The Challenges of Testing Non-Code Assets

Of course, expanding CI beyond code isn’t as easy as flipping a switch. Testing non-code assets comes with its own set of hurdles.

Each type of asset requires specialized testing strategies:

- Data pipelines: Often need synthetic data generation to simulate real scenarios.
- ML models: Require bias detection, performance validation, and interpretability checks.
- Infrastructure: Demands mock deployments and automated rollback mechanisms.

These workflows are complex, so modern CI systems need flexible, intelligent testing mechanisms that adapt to multiple asset types—automatically and efficiently.
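Synthetic data generation, the first strategy above, can start small. The sketch below produces reproducible fake order records with a configurable share of missing values plus one deliberate edge case. The record shape, country list, and value ranges are invented for illustration.

```python
import random


def synthetic_orders(n, seed=0, null_rate=0.05):
    """Generate n reproducible fake order rows plus one edge-case row."""
    rng = random.Random(seed)  # seeded so every CI run sees the same data
    countries = ["DE", "US", "IN", "BR"]  # hypothetical sample values
    rows = []

    for i in range(n):
        amount = round(rng.uniform(1.0, 500.0), 2)
        rows.append({
            "order_id": i,
            # Randomly blank out some amounts to simulate missing values.
            "amount": None if rng.random() < null_rate else amount,
            "country": rng.choice(countries),
        })

    # Edge case: zero amount and empty country, which real data may contain.
    rows.append({"order_id": n, "amount": 0.0, "country": ""})
    return rows
```

Seeding the generator matters more than it looks: a pipeline test that fails on one run and passes on the next is hard to debug, so the same seed should always yield the same rows.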


Security and Compliance in the New CI Landscape

Here’s where it gets even more interesting (and important). Non-code assets—especially data and ML models—introduce serious compliance and security concerns.

Consider the following:

- Data pipelines might handle sensitive or regulated data.
- ML models must meet auditability and fairness standards.
- Infrastructure scripts need strict access control and policy enforcement.

A modern CI pipeline must continuously test for compliance to ensure every update stays within regulatory boundaries.

By using tools that provide real-time alerts, automated policy checks, and access monitoring, enterprises can avoid costly compliance breaches and ensure full transparency across teams.
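An automated policy check does not have to be elaborate. As a sketch, the function below scans sample pipeline output for email addresses that appear outside columns explicitly allowed to hold them. The regular expression is a simplified pattern rather than a full email validator, and the column names are hypothetical.

```python
import re

# Simplified email pattern, good enough to flag likely leaks in sample data.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_unmasked_emails(rows, allowed_columns=frozenset()):
    """Return (row_index, column) pairs where an email appears unexpectedly."""
    findings = []
    for i, row in enumerate(rows):
        for col, value in row.items():
            if col in allowed_columns:
                continue  # this column is permitted to hold emails
            if isinstance(value, str) and EMAIL.search(value):
                findings.append((i, col))
    return findings
```

A CI job could fail when the findings list is non-empty, which turns a written data-handling policy into a check that runs on every change.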

Making CI Smarter with AI and Automation

The new era of CI isn’t just about expanding scope—it’s about making it intelligent.

AI-driven CI tools are changing the game by:

- Predicting failures before they happen.
- Automatically generating test cases based on previous errors.
- Adapting to new data or configurations in real time.
- Reducing manual testing effort while improving accuracy.

This next-generation approach—often called Intelligent Continuous Integration—helps enterprises handle the scale and complexity of today’s digital assets with ease.

When your CI pipeline can learn, adapt, and optimize itself, it’s no longer just a process—it’s a competitive advantage.
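To give a feel for the idea of predicting failures, here is a deliberately simple stand-in: a heuristic, not an AI model, that ranks tests by how often they failed in the past when the same files changed. The history format and names are assumptions; commercial tools use far richer signals.

```python
from collections import Counter


def prioritize_tests(history, changed_files):
    """Rank tests by past failure rate on the files changed in this commit.

    `history` is a list of (test_name, touched_file, failed) tuples
    collected from earlier CI runs (a hypothetical log format).
    """
    runs = Counter()
    fails = Counter()
    for test, touched_file, failed in history:
        if touched_file in changed_files:
            runs[test] += 1
            fails[test] += int(failed)

    # Highest failure rate first; ties are broken alphabetically.
    scored = [(fails[test] / runs[test], test) for test in runs]
    return [test for _, test in sorted(scored, key=lambda s: (-s[0], s[1]))]
```

Running the highest-ranked tests first gives developers earlier feedback on the changes most likely to break something.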

The Future of CI: Testing Everything, Not Just Code

As businesses continue to digitalize, CI must evolve to keep up. From data validation to model governance and infrastructure testing, automation now plays a critical role in delivering reliable, compliant, and scalable systems.

Companies that embrace this new CI mindset will gain a massive edge—they’ll catch issues early, ship updates faster, and maintain a stronger security posture than their competitors.

But managing such a complex ecosystem isn’t easy without the right tools.

Why Opkey Leads the Future of Continuous Integration

For enterprises looking to integrate, automate, and test non-code assets seamlessly, Opkey stands out as a powerful solution.

Here’s why:

- AI-Powered Testing: Opkey uses intelligent agents to automate configuration validation, regression testing, and data integrity checks, without writing a single line of code.
- Cross-Asset Coverage: Whether it’s data pipelines, ML models, or infrastructure scripts, Opkey ensures everything works in sync.
- End-to-End Visibility: You get complete insight into how updates affect your environment, helping you prevent downtime before it happens.
- Continuous Compliance: Built-in governance ensures every deployment meets industry standards and audit requirements.

In short, Opkey empowers organizations to move confidently beyond code—into a world of comprehensive digital assurance where every asset, no matter what type, is continuously tested and optimized.

Final Thoughts

Continuous integration is no longer just about developers pushing code. It’s about creating a dynamic, intelligent, and unified testing ecosystem that keeps every part of your digital infrastructure running smoothly.

From data validation to ML model governance, from IaC to compliance—modern CI touches it all. And with tools like Opkey leading the charge, enterprises can embrace this evolution without adding complexity.

The future of CI isn’t just faster—it’s smarter, broader, and more connected than ever before.